RumexWeeds: A Grassland Dataset for Agricultural Robotics.

Abstract: Computer vision can lead towards more sustainable agricultural production by enabling robotic precision agriculture. Vision-equipped robots are being deployed in the fields to take care of crops and control weeds. However, publicly available agricultural datasets containing both image data and data from navigational robot sensors are scarce. Our real-world dataset RumexWeeds targets the detection of the grassland weeds Rumex obtusifolius L. and Rumex crispus L. RumexWeeds includes whole image sequences instead of individual static images, which is rare for computer vision image datasets, yet crucial for robotic applications. It allows for more robust object detection by incorporating temporal aspects and considering different viewpoints of the same object. Furthermore, RumexWeeds includes data from additional navigational robot sensors (GNSS, IMU and odometry), which can increase robustness when additionally fed to detection models. In total, the dataset includes 5,510 images with 15,519 manual bounding box annotations collected at 3 different farms on 4 different days in summer and autumn 2021. Additionally, RumexWeeds includes a subset of 340 ground truth pixel-wise annotations. The dataset is publicly available at RumexWeeds. In this paper we also use RumexWeeds to provide baseline weed detection results with a state-of-the-art object detector; in this way we elucidate interesting characteristics of the dataset.

Example Sequences

20210806_hegnstrup - Sequence 17

20210806_stengard - Sequence 8

20210807_lundholm - Sequence 23

Image Annotations

Ground truth annotations

Weed enclosure with bounding box

For all labeled image sequences, bounding box annotations are generated manually for the grassland weed Rumex. Each bounding box includes the whole plant with all attached leaves. If the plant consists of only one leaf, the single leaf is enclosed by a bounding box. In images with very dense weed cover, it can be difficult to identify all plants with high certainty, so noisy labels can be expected there. We differentiate between the two relevant species Rumex obtusifolius L. and Rumex crispus L. and assign the relevant class to each bounding box. Both species are equally undesired on dairy grassland fields, so for most applications it is reasonable to treat them as one class. The decision for one of the above-mentioned classes was made purely based on the visual appearance in the images. [1]

Pixel-wise weed segmentation masks

Additionally, we provide a small number of carefully manually-annotated ground truth masks for a random subset of 20 images per location and day, resulting in 100 images and 340 segmented bounding box crops in total. [1]

Joint-stem with ellipse

We supplement the dataset with additional manually created keypoint annotations: for each bounding box in the dataset, a joint-stem annotation has been made. The joint-stem annotation is represented by an ellipse, where the center indicates the position and the ellipse shape indicates the uncertainty of the human annotator. [2]
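As an illustration of how such an ellipse could be used, the following minimal sketch scores a predicted stem position against the annotator's uncertainty region. It assumes an axis-aligned ellipse given by a center and two semi-axes; the class name `StemEllipse` and its fields are hypothetical and do not reflect the dataset's actual storage format.

```python
from dataclasses import dataclass


@dataclass
class StemEllipse:
    """Hypothetical axis-aligned joint-stem ellipse, in pixel coordinates."""
    cx: float  # center x: annotated joint-stem position
    cy: float  # center y
    rx: float  # semi-axis along x: annotator uncertainty
    ry: float  # semi-axis along y

    def normalized_distance(self, x, y):
        """0 at the center, 1 on the ellipse boundary, >1 outside."""
        dx = (x - self.cx) / self.rx
        dy = (y - self.cy) / self.ry
        return (dx * dx + dy * dy) ** 0.5

    def contains(self, x, y):
        """True if (x, y) lies inside or on the ellipse."""
        return self.normalized_distance(x, y) <= 1.0


ellipse = StemEllipse(cx=100.0, cy=50.0, rx=10.0, ry=5.0)
print(ellipse.contains(106.0, 52.0))  # inside the uncertainty region
print(ellipse.contains(100.0, 56.0))  # outside along the short axis
```

Normalizing by the semi-axes means that a prediction is penalized less along the direction in which the annotator was less certain.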

Summary

The following table summarizes the datapoints that have been collected and annotated on four different days and at three different farms. We show the number of foreground images (those containing one or more Rumex objects), the number of pure background images, the total number of annotations for both classes (Rumex obtusifolius L., Rumex crispus L.), the proportion of positive pixels among all image pixels, and the average bounding box size as a percentage of the whole image size. Although the number of foreground images containing at least one object is significantly higher than the number of background images, the Rumex plant is still highly under-represented when considering the positive pixel proportion for each data collection session. The table also lists the exact number of pixel-wise annotations for both object classes and each data collection session. [1]

| | ID | 20210806_hegnstrup | 20210806_stengard | 20211006_stengard | 20210807_lundholm | 20210908_lundholm |
|---|---|---|---|---|---|---|
| General | Date | Aug. 06, 2021 | Aug. 06, 2021 | Oct. 11, 2021 | Aug. 7, 2021 | Sep. 8, 2021 |
| | Farm Name | Hegnstrup | Stengard | Stengard | Lundholm | Lundholm |
| | Location | 55°50'12.5"N 12°22'57.8"E | 55°50'24.5"N 12°12'46.8"E | 55°50'24.5"N 12°12'46.8"E | 55°56'36.7"N 12°10'09.0"E | 55°56'36.7"N 12°10'09.0"E |
| | # sequences | 18 | 21 | 16 | 29 | 14 |
| | # FG images | 454 | 1459 | 1207 | 786 | 962 |
| | # BG images | 126 | 75 | 61 | 342 | 38 |
| Bounding Box Annotation | # Rumex obtusifolius | 563 | 3653 | 4358 | 774 | 3208 |
| | # Rumex crispus | 89 | 1296 | 1142 | 264 | 172 |
| | pos. pixel proportion | 7.57 % | 5.66 % | 6.22 % | 4.11 % | 12.90 % |
| | avg. object size | 6.74 % | 1.75 % | 1.44 % | 4.47 % | 3.82 % |
| Pixel-wise Annotation | # Rumex obtusifolius | 23 | 66 | 92 | 17 | 71 |
| | # Rumex crispus | 3 | 16 | 41 | 7 | 4 |
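As a quick consistency check, the per-session counts in the summary table can be summed to reproduce the dataset totals stated in the abstract. The sketch below simply copies the numbers from the table; the dictionary layout and key names are chosen for illustration only.

```python
# Per-session counts copied from the summary table.
sessions = {
    "20210806_hegnstrup": {"fg": 454, "bg": 126, "obtusifolius": 563, "crispus": 89},
    "20210806_stengard": {"fg": 1459, "bg": 75, "obtusifolius": 3653, "crispus": 1296},
    "20211006_stengard": {"fg": 1207, "bg": 61, "obtusifolius": 4358, "crispus": 1142},
    "20210807_lundholm": {"fg": 786, "bg": 342, "obtusifolius": 774, "crispus": 264},
    "20210908_lundholm": {"fg": 962, "bg": 38, "obtusifolius": 3208, "crispus": 172},
}

# Foreground plus background images over all sessions.
total_images = sum(s["fg"] + s["bg"] for s in sessions.values())
# Bounding boxes of both classes over all sessions.
total_boxes = sum(s["obtusifolius"] + s["crispus"] for s in sessions.values())
# Share of Rumex crispus among all bounding boxes (class imbalance).
crispus_share = sum(s["crispus"] for s in sessions.values()) / total_boxes

print(total_images)  # 5510
print(total_boxes)   # 15519
print(f"Rumex crispus share of boxes: {crispus_share:.1%}")
```

The last line makes the class imbalance explicit: Rumex crispus accounts for roughly a fifth of all bounding boxes, which is worth keeping in mind when the two classes are not merged.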

Automatic generation of pixel-wise annotations for the remaining bounding boxes

Bounding box annotations provide only a rough position of the weed plant in the image. For some applications, weed segmentation is crucial in order to estimate plant size and position more precisely. Unfortunately, manual pixel-wise object annotations are especially expensive to obtain for large amounts of training data. Therefore, we generated additional segmentation masks automatically, based on a very small number of carefully manually-annotated ground truth masks. We hypothesise that these few ground truth segmentation masks are sufficient to generate rough masks for the remaining bounding boxes in the dataset. Performing segmentation predictions on bounding box crops simplifies the problem to a great extent, because we only need to differentiate between foreground and background, while the foreground mask inherits the label of the bounding box. Furthermore, it is generally expected that no other negative plant objects with similar features (e.g. Taraxacum, Cirsium) lie within the bounding box crops. The manually segmented bounding box crops are used to train a segmentation network, which then generates segmentation masks for all remaining bounding box crops. [1]

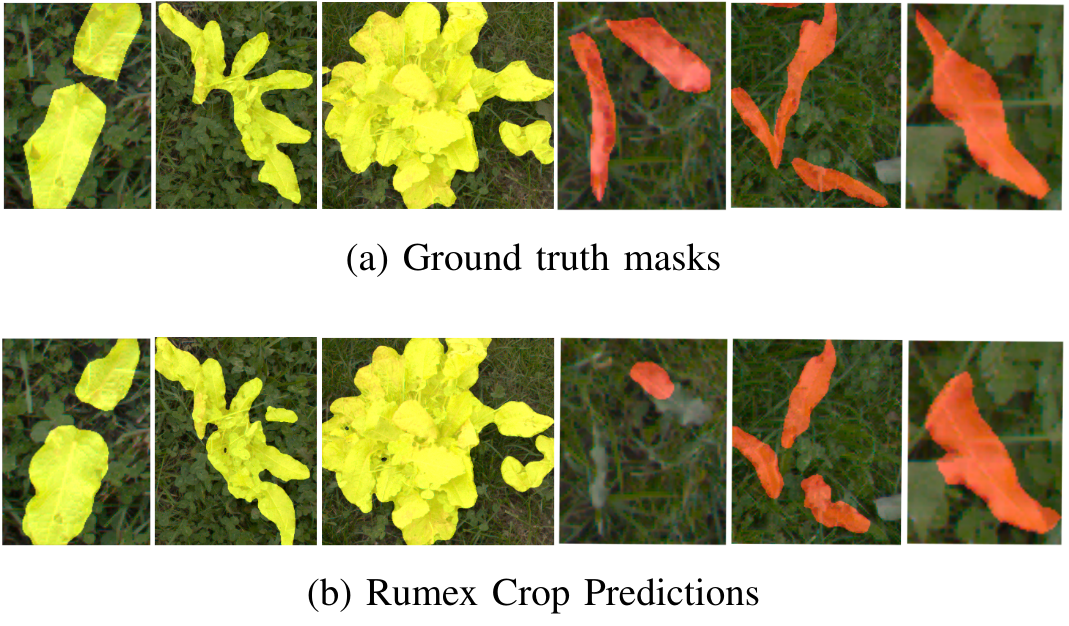
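The crop-based idea described above can be sketched as two small helpers: one that cuts a bounding box crop out of an image, and one that places a binary crop mask back into a full-size label map so that the foreground inherits the class of the box. This is a minimal illustration, not the authors' pipeline; the function names, the integer box format `(x_min, y_min, x_max, y_max)` and the class ids are assumptions.

```python
import numpy as np


def crop_box(image, box):
    """Return the crop of `image` (H x W x C) inside box = (x_min, y_min, x_max, y_max)."""
    x0, y0, x1, y1 = box
    return image[y0:y1, x0:x1]


def paste_mask(crop_mask, box, image_shape, class_id):
    """Place a binary crop mask into a full-size label map (0 = background).

    Foreground pixels of the crop mask inherit `class_id` from the bounding box.
    """
    x0, y0, x1, y1 = box
    full = np.zeros(image_shape[:2], dtype=np.uint8)
    # The slice is a view, so the boolean assignment writes into `full`.
    full[y0:y1, x0:x1][crop_mask.astype(bool)] = class_id
    return full


# Toy example: a 6 x 8 image with one 3 x 3 box.
image = np.zeros((6, 8, 3), dtype=np.uint8)
box = (2, 1, 5, 4)
crop = crop_box(image, box)
# Stand-in for a segmentation network's foreground/background prediction.
crop_mask = np.array([[0, 1, 0],
                      [1, 1, 1],
                      [0, 1, 0]])
label_map = paste_mask(crop_mask, box, image.shape, class_id=1)
print(crop.shape, int(label_map.sum()))
```

Because the network only sees the crop, it never has to decide which species is present; that information comes entirely from the box label passed as `class_id`.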

The final model achieves an mIoU of 0.776 on the test set. The following figure shows some qualitative results on the test set, with ground truth masks in the top row and model predictions in the bottom row. Most segmentation masks are precise enough to determine an approximate plant size as well as the center location of the plant. When the plant leaves are thin and resemble grass, the predictions are less reliable; this mainly occurs for the species Rumex crispus L. [1]

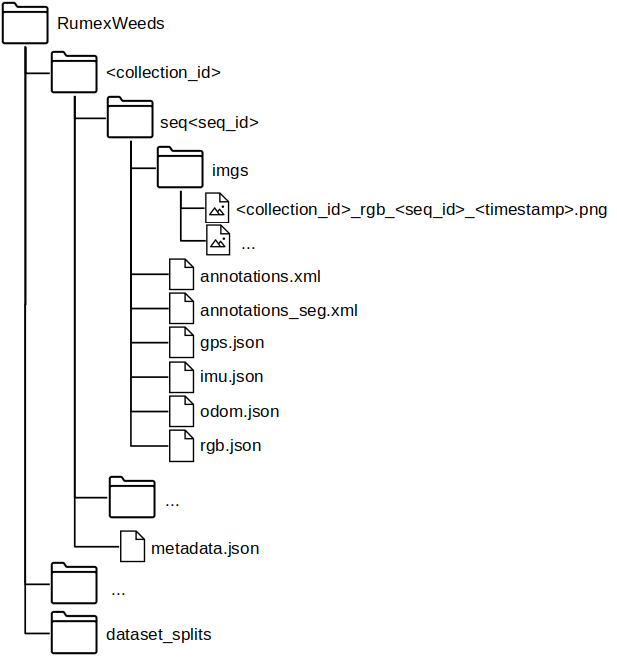
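For readers who want to compute a comparable score on their own predictions, here is a minimal sketch of intersection over union for binary masks, averaged over mask pairs. The averaging scheme is an assumption; the paper may average over classes or over the dataset differently, so values from this sketch are not guaranteed to match the reported 0.776.

```python
import numpy as np


def iou(pred, gt):
    """Intersection over union of two binary masks of equal shape."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    union = np.logical_or(pred, gt).sum()
    if union == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return float(np.logical_and(pred, gt).sum() / union)


def mean_iou(preds, gts):
    """Average IoU over pairs of predicted and ground truth masks."""
    return float(np.mean([iou(p, g) for p, g in zip(preds, gts)]))


gt = np.array([[1, 1, 0],
               [1, 1, 0],
               [0, 0, 0]])
over_segmented = np.array([[1, 1, 1],
                           [1, 1, 1],
                           [0, 0, 0]])

# One over-segmented prediction (IoU 4/6) and one perfect prediction (IoU 1).
score = mean_iou([over_segmented, gt], [gt, gt])
print(round(score, 3))
```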

File Structure of .zip

RumexWeeds contains 5 subfolders, one for each data collection session. Each session contains a variable number of sequences, and each sequence consists of consecutive images recorded at 5 FPS.

The corresponding IMU, GNSS and odometry datapoints are saved as dictionaries in the seq<seq_id>/*.json files. Each entry is simply the corresponding ROS message saved as a dictionary: sensor_msgs/NavSatFix.msg for GNSS, sensor_msgs/Imu.msg for the IMU and nav_msgs/Odometry.msg for odometry. The images and the navigational data are linked via the image file name, which serves as the key in each JSON dictionary; in other words, all sensor datapoints with the same key are time-synchronized. The file seq<seq_id>/rgb.json contains only the timestamp in the standard timestamp format and is therefore redundant with the timestamp embedded in the image name.

The file seq<seq_id>/annotations.xml contains all ground truth (i.e. human-made) bounding box and segmentation annotations for the corresponding image sequence. In addition, seq<seq_id>/annotations_seg.xml contains all automatically generated pixel-wise annotations. The annotation format follows the conventions of the CVAT annotation tool, version 1.1.

Finally, metadata.json holds information concerning all sequences within one data collection <collection_id>. It contains the transforms from base_link to the three sensors (base_link → camera_link, base_link → imu_link, base_link → base_gnss) as well as the transform base_footprint → base_link. The base_footprint frame is positioned on the ground, while the base_link frame lies within the robot, so this transform indicates the robot's height above ground. The folder dataset_splits contains predefined dataset splits so that results in future work can be compared with one another.
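The sketch below shows one way the annotation file and the sensor dictionaries could be combined through their shared image-name keys. It is a hedged illustration rather than the repository's loader: it assumes the usual CVAT "for images 1.1" layout (`<image name=...>` elements with `<box label xtl ytl xbr ybr>` children), and the image names, label strings and GNSS values in the miniature example are made up. Whether the JSON keys include the file extension should be checked against the real files.

```python
import json
import xml.etree.ElementTree as ET


def parse_cvat_boxes(xml_text):
    """Map image name -> list of boxes from a CVAT 'for images 1.1' XML string."""
    boxes = {}
    for image in ET.fromstring(xml_text).iter("image"):
        boxes[image.get("name")] = [
            {
                "label": box.get("label"),
                "xtl": float(box.get("xtl")),  # top-left x
                "ytl": float(box.get("ytl")),  # top-left y
                "xbr": float(box.get("xbr")),  # bottom-right x
                "ybr": float(box.get("ybr")),  # bottom-right y
            }
            for box in image.findall("box")
        ]
    return boxes


def join_by_image(boxes, **sensors):
    """Combine annotations and sensor dictionaries that share image-name keys."""
    return {
        name: {
            "boxes": image_boxes,
            # A sensor without an entry for this image yields None.
            **{sensor: data.get(name) for sensor, data in sensors.items()},
        }
        for name, image_boxes in boxes.items()
    }


# Hypothetical miniature example; real names, labels and values will differ.
xml_text = """<annotations>
  <image id="0" name="img_0001.png" width="1920" height="1200">
    <box label="rumex_obtusifolius" xtl="10.0" ytl="20.0" xbr="110.0" ybr="220.0" occluded="0"/>
  </image>
  <image id="1" name="img_0002.png" width="1920" height="1200"/>
</annotations>"""
gnss = json.loads('{"img_0001.png": {"latitude": 55.8368, "longitude": 12.3827}}')

samples = join_by_image(parse_cvat_boxes(xml_text), gnss=gnss)
print(samples["img_0001.png"]["boxes"][0]["label"])
print(samples["img_0002.png"]["gnss"])
```

With real data, `xml_text` would come from reading a sequence's annotation file and each keyword argument from one of the sequence's JSON files loaded with `json.load`.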

Getting started: PyTorch Dataset Class

Download data

wget https://data.dtu.dk/ndownloader/files/39268307

The PyTorch Dataset class provides an easy entry point for working with the dataset. To visualize some example images, run:

python rumex_weeds/visualize_img_data.py --data_folder <path-to-your-extracted-RumexWeeds-folder> --num_images <number-of-images-to-display> --visualize_type <bbox/gt_mask/mask/ellipse/all>

References

[1] Ronja Güldenring, Frits van Evert and Lazaros Nalpantidis, "RumexWeeds: A grassland dataset for agricultural robotics", Journal of Field Robotics, 2023.

[2] Jiahao Li, Ronja Güldenring and Lazaros Nalpantidis, "Real-time joint-stem prediction for agricultural robots in grasslands using multi-task learning", Agronomy, vol. 13, no. 9, 2023.

Citation

If you find this work useful in your research, please consider citing:

@article{https://doi.org/10.1002/rob.22196,

author = {Güldenring, Ronja and van Evert, Frits K. and Nalpantidis, Lazaros},

title = {RumexWeeds: A grassland dataset for agricultural robotics},

journal = {Journal of Field Robotics},

keywords = {agricultural robotics, grassland weed, object detection, precision farming, robotics dataset, Rumex crispus, Rumex obtusifolius},

doi = {https://doi.org/10.1002/rob.22196},

url = {https://onlinelibrary.wiley.com/doi/abs/10.1002/rob.22196},

eprint = {https://onlinelibrary.wiley.com/doi/pdf/10.1002/rob.22196}

}

@Article{agronomy13092365,

AUTHOR = {Li, Jiahao and Güldenring, Ronja and Nalpantidis, Lazaros},

TITLE = {Real-Time Joint-Stem Prediction for Agricultural Robots in Grasslands Using Multi-Task Learning},

JOURNAL = {Agronomy},

VOLUME = {13},

YEAR = {2023},

NUMBER = {9},

ARTICLE-NUMBER = {2365},

URL = {https://www.mdpi.com/2073-4395/13/9/2365},

ISSN = {2073-4395},

DOI = {10.3390/agronomy13092365}

}